The Cabin Monitoring System also allows entertainment functions such as taking selfies to share experiences while traveling. (Image: Valeo)

By Colin Barnden

What’s at stake:

Despite the investment of tens of billions of dollars, privately owned passenger vehicles will not offer meaningful autonomous driving features anytime soon. So if it isn’t “self-driving,” what is the next big thing for the automotive and tech industries?

Irrespective of the pledges made by Mobileye and Mercedes-Benz for “eyes-off” driving and the possibility for vehicle owners to “buy their time back,” drivers will continue to keep their eyes on the road and their minds on the task of driving for the foreseeable future. To paraphrase Mark Twain, all promises to free humans of the burdens of driving “just ain’t so.”

If the road ahead for automakers isn’t “self-driving,” then what is the next major technology innovation for the automotive industry?

The answer is what many call the “Immersive User Experience,” or IUX.

Ahead lies a battle between the giants of the tech industry that will redefine the automotive digital cockpit and the in-cabin experience, while reimagining the interface between the human and the vehicle.

Think of everything you have ever loved about the content-rich, high-definition, interactive experience of smartphones coming to your car. Finally.

IUX defined

The IUX will combine eye-gaze, gesture, and voice control with capacitive and haptic touch inputs. High-definition displays will extend from pillar to pillar right across the vehicle dashboard, providing driving information and streaming video. Add in 5G cellular connectivity, cloud computing, and over-the-air updates, and the interior of the car will never look or feel the same again.

An augmented reality head-up display will transform the windshield into a human-vehicle interface that projects route guidance and safety-critical information directly into the driver’s line of sight, so that drivers can keep their eyes and attention fixed on the road ahead.

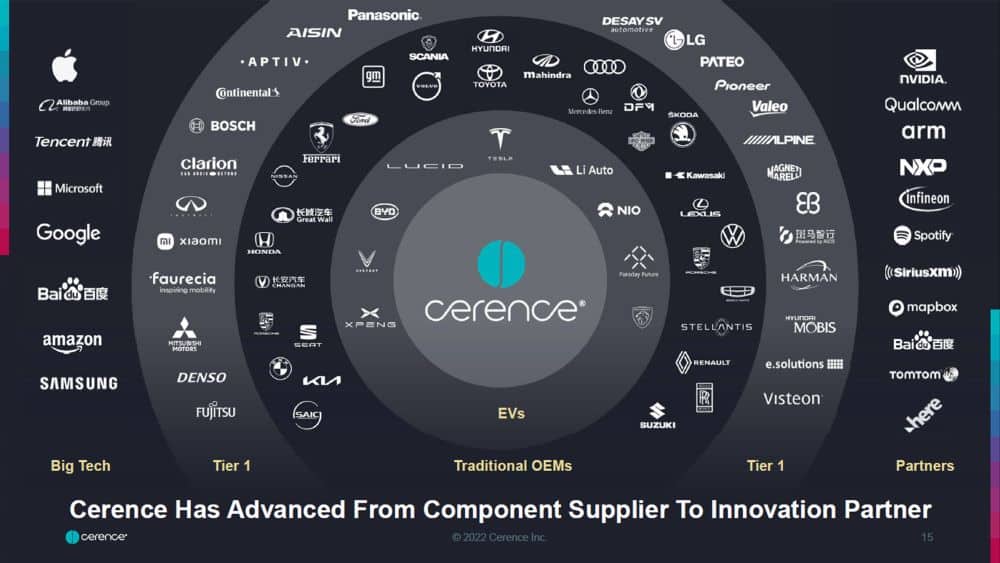

The stage now looks set for an epic race between Apple, Amazon, Cerence, Google, and Microsoft to completely rethink and redesign the automotive in-cabin experience.

As Cerence showed at its investor day last November, Alibaba, Baidu, and Tencent are also interested, ensuring a truly global race ahead encompassing big tech, automakers, and established tier-1 suppliers.

Although “self-driving” is not now part of the near-term future for the automotive industry, the expectation of fully autonomous operation gave rise to the development of SoCs with previously unheard-of levels of processing power, combined with massive AI and deep learning accelerators.

High-performance processors such as Qualcomm’s Snapdragon Ride Flex SoC and Nvidia’s Drive Thor look set to be harnessed by system developers to power the next generation of in-cabin and vehicle cockpit features.

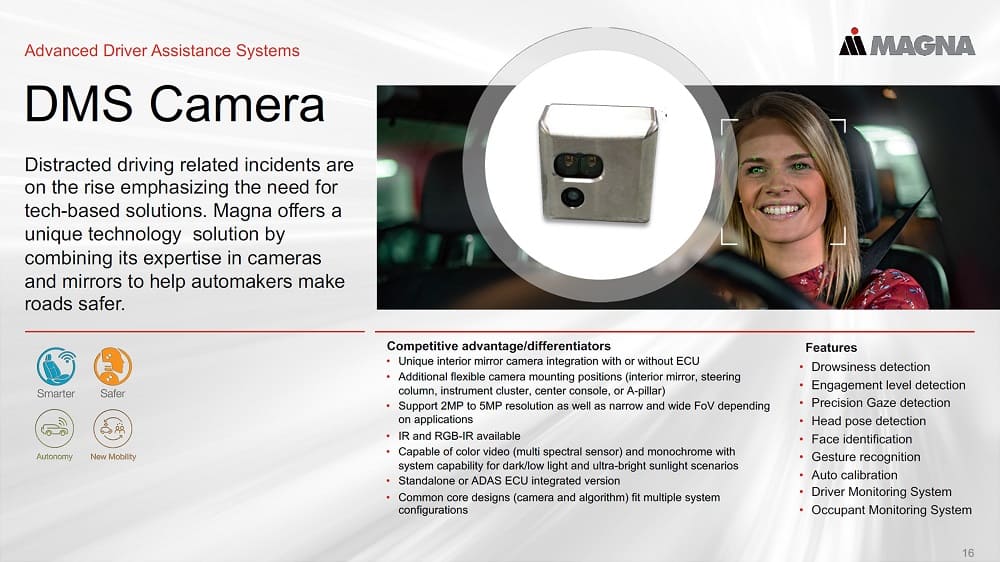

Eye-gaze and gesture recognition will be provided by face and eye-tracking companies like Seeing Machines. Gaze tracking has already morphed far beyond simply monitoring the driver for signs of distraction and drowsiness. The next frontier is all-occupant interior sensing, and the rear-view mirror has emerged as the key integration point, as shown by Magna’s occupant monitoring mirror at CES.

Driver monitoring is not “nanny tech” that spies on and nags the driver. It is safety technology that sees and understands human behavior as it applies to crash risk. From its work in the aviation sector, Seeing Machines has gained vital experience combining precision eye tracking with human factors research to analyze, in real time, the glance patterns of pilots scanning flightdeck instruments.

This work led to real-time measurement of pilot workload, which when transferred into the automotive environment becomes a measure of driver workload.

The immersive user experience, which integrates displays on both the dashboard and the windshield, is therefore made safe by constantly measuring driver workload to prevent cognitive overload. Technology from the flightdeck is heading for the car’s cabin to make driving safer and more enjoyable.

Apple iCar?

Today it is not possible to buy a car or truck that provides an immersive user experience, but we are likely to see concepts revealed by every automaker over the coming year.

CES 2024 is certain to feature demonstrations from multiple suppliers, as the realization hits home that the billions of dollars spent on “self-driving” will not lead to a suitable return on investment and that better and bigger opportunities exist by providing exciting new features and services in the vehicle cabin.

While rumors abound of an Apple iCar, don’t expect it to offer autonomous driving. Instead, when we finally see it, the iCar will almost certainly amaze us with integration of the in-cabin technologies discussed here.

And just as with iPhone, consumers will race to buy iCar. Legacy automakers, you have been warned.

InCabin

The immersive user experience is in the very early stages of the development cycle. We don’t yet know how the pieces fit together, but we can start to see the key components and who the critical players are.

The trend will be discussed at InCabin, an event to be held March 15-17, 2023, in Scottsdale, Arizona. The conference will include a panel session called “Imagining the Immersive User Experience,” featuring Prateek Kathpal, CTO of Cerence, and Colin Barnden, principal analyst at Semicast Research, and moderated by Junko Yoshida, co-founder and editor-in-chief of The Ojo-Yoshida Report.

Bottom line:

Expect automakers, tier-1 suppliers, and the wider big tech industry to deploy billions of dollars on research and development and mergers and acquisitions to secure pole position in the race to develop the immersive user experience.

Colin Barnden is principal analyst at Semicast Research. He can be reached at [email protected].
