Source: Moshe Zalcberg

“No politics!” we panelists agreed before going on stage for the DVCon US 2025 panel “Are AI chips harder to verify?” (Even though we all also agreed that politics today, in the US and virtually everywhere else, is too annoying, dramatic or amusing to be ignored; choose your preferred adjective.)

And then, a good few minutes into the panel, it happened. AMD’s Ahmad Ammar mentioned that to properly verify AI chips, verification engineers need to be brought in to give their input from day one.

So I said: “We’ve agreed not to talk about politics, but it seems we may need to talk about organizational politics instead.” Traditionally, projects were defined by architects, then committed to RTL by designers, and verification engineers came at the very end of the food chain. If they faced a challenge at that stage, they were told: “Deal with it!”

Is this changing now, driven by the complexity and scale of today’s AI devices?

“Absolutely,” said Harry Foster, Siemens’ Chief Verification Scientist. “I have a background in HPC chips, and in those days the expected values were at least deterministic. With AI, at the combined chip and application level, you don’t even know what the expected values are, and you need to take this into consideration from the start.”

“In our case,” added Stuart Lindsay, a Verification Methodologist at Groq, “the very architecture of the chip, among other things, makes verification more straightforward, as it doesn’t have to deal with cache coherence.”

“Indeed, verification architects should be, and are being, invited to the very first design reviews,” added Shuqing Zhao of Meta.

“And not only verification, but all stakeholders, including virtual modeling people and firmware engineers,” concluded Shahriar Seyedhosseini of MatX.

Design for verification, stage one

I immediately jumped in and said: “So I’d like to establish the concept of ‘Design for Verification’ as a new and emerging trend, similar to ‘Design for Test’ and ‘Design for Manufacturing’, and to claim a trademark on it!”

Harry cooled my enthusiasm: “Moshe, that term has existed for a long time; it’s nothing new.”

Harry is indeed an experienced verification professional and a long-time observer and chronicler of this industry, so he’s obviously right. The “Design for Verification” concept does exist, focused on aerospace manufacturing and other complex industries; there is even a Wikipedia article about it, which cites a 2016 paper by Andrew Francis, a mechanical engineer.

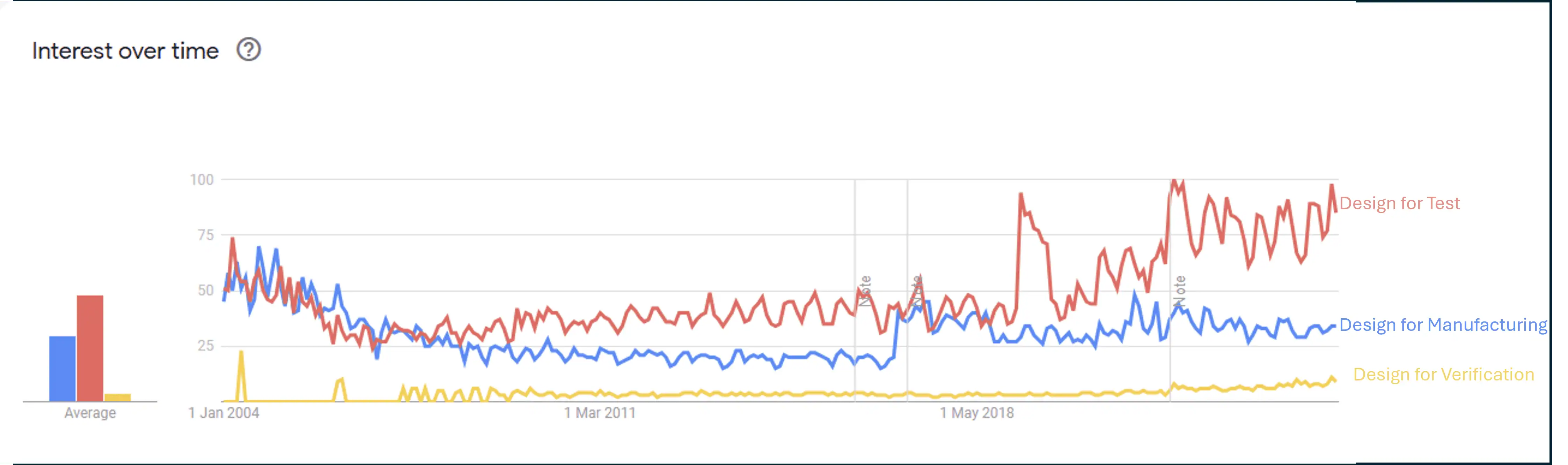

Yet, while the concept isn’t new, its implementation in semiconductor design remains nascent. Judging by Google Trends, interest in “Design for Verification” comes nowhere close to that of its cousins “Design for Test” and “Design for Manufacturing”.

So what exactly does Design for Verification entail in the context of modern chip design?

Design for Verification: A strategic approach

- Direct Architecture Considerations: Verification requirements directly influence critical design parameters, compelling architects to carefully consider block sizes, bus architectures, data and memory access patterns, and hardware/software cooperation. This ensures that verification challenges are addressed proactively, through deliberate architectural choices that optimize testability and observability.

- Modelling and Virtual Prototyping: Virtual prototyping takes a front seat in architecture exploration and performance analysis of AI chips, driven by the “clean-sheet” nature of many AI structures. These techniques become critical because they are often the only means of defining expected results across chip boundaries and internal states, providing a crucial reference point for verification efforts.

- Multi-Level Abstractions: To manage the mounting scale of AI chips, sophisticated techniques must be applied to “stub” certain parts of the Design Under Test (DUT) with higher levels of abstraction. This approach, often enabled by advanced modeling techniques, allows verification teams to handle increasingly complex chip designs by strategically simplifying and isolating specific components.

- Hardware-Software Co-Verification: Unlike traditional SoC designs, with software running on one or a few cores, AI chips typically involve software running on many cores concurrently, with a direct impact on device performance and functionality. Consequently, the strategy for verifying hardware and software operation must be comprehensively defined from the earliest stages of design.

- Design Parametrization: Given the immense scale of AI chips, verification strategies must incorporate techniques that allow designs to be scaled up and down effectively. This enables verification of representative subsets across different verification methodologies, including simulation, formal verification and emulation. (In fact, Veriest presented a paper at DVCon dealing explicitly with this topic.)

- Chiplet-Related Aspects: Large AI chips increasingly rely on multi-chiplet architectures, introducing a complex new dimension of verification challenges that must be addressed during early architectural planning. These challenges must be weighed when making design partitioning decisions, so that verification strategies fully account for inter-chiplet interactions and communication.

- System Workload Verification: As highlighted repeatedly during the panel, AI chip projects often fail not because of functional bugs in an individual chip, but because the entire system, typically comprising thousands of chips, cannot effectively handle specific workloads. The complexity of performance, latency and power analysis calls for a holistic verification approach, integrated from the project’s inception.

Design for Verification transforms verification from a reactive testing process to a proactive, strategic design element, ultimately reducing risk and accelerating semiconductor innovation.

Given the escalating complexity of current AI (and other) chips, are we finally witnessing the emergence of “Design for Verification” as a critical engineering imperative for semiconductor companies? The panel’s insights suggest we’re at an inflection point where verification is no longer an afterthought, but a fundamental consideration from the earliest stages of chip design.

Moshe Zalcberg has more than 20 years of experience in the semiconductor and design automation industries, having spent over 12 years of his career at Cadence Design Systems, in roles including General Manager for Israel and European head of Professional Services. In his current position as CEO of Veriest Solutions, a leading ASIC services company, and as a Partner at Silicon Catalyst, Moshe is exposed to a range of semiconductor projects and a variety of design and verification approaches. Moshe is an electrical engineering graduate of the Technion Israel Institute of Technology and holds an MSc in Electronics and an MBA, both from Tel Aviv University.

Copyright permission/reprint service of a full TechSplicit story is available for promotional use on your website, marketing materials and social media promotions. Please send us an email at [email protected] for details.