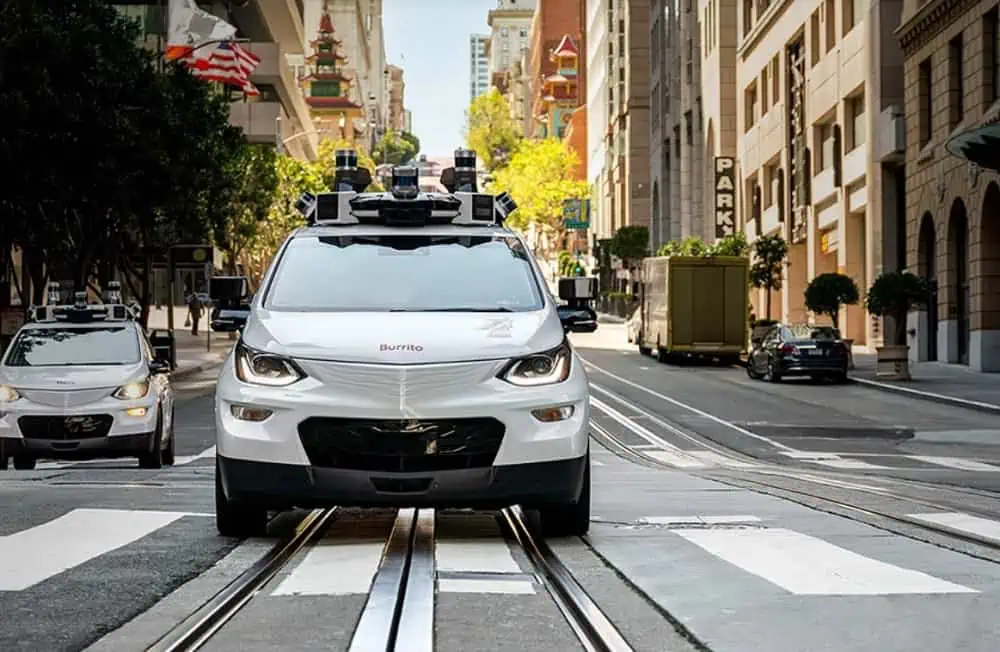

Cruise in San Francisco (Image: Cruise)

What’s at stake:

Does a crash that severely injured a pedestrian in San Francisco mark a turning point in the flawed deployment of robocars on city streets?

AV developer Cruise’s decision to replace an in-vehicle human monitor with a “remote assistance center,” a contributing factor in an accident that severely injured a pedestrian in San Francisco, underscores the reality that driverless vehicles attempting to navigate city streets remain inherently unsafe.

We have examined the technology issues related to the October 2023 crash during which a Cruise AV dragged a pedestrian about 20 feet at speeds up to 7.7 mph, according to post-accident reports. The pedestrian was initially struck by another vehicle, then thrown into the path of the Cruise AV. Programmed to react to a side impact, the AV executed a pullover maneuver intended to clear the street, doing so with the pedestrian trapped under the vehicle.

Absent a safety, or “valet,” driver, Cruise contractors in Arizona remotely linked to the vehicle did not detect the incident or the victim until the pullover maneuver and the dragging were already under way, AV safety expert Phil Koopman noted in his analysis of post-crash reports commissioned by Cruise.

Soon, but not soon enough, Cruise personnel at the scene and the Cruise Remote Assistance Center, or CRAC, in Arizona realized they had a serious pedestrian injury on their hands. Koopman characterized Cruise’s failed response, which soon focused on managing the crash narrative in anticipation of a media and regulatory firestorm, as “a failure to proactively disclose material bad news.”

As with other high-profile accident investigations, the Cruise in-house investigations focused, in an eerily familiar way, on how, not why, the October 2023 crash occurred. Why, for example, did the analysis commissioned by Cruise not address safety steps for preventing future accidents involving driverless vehicles?

Damage control

Cruise commissioned two crash reports by Quinn Emanuel Trial Lawyers and engineering consultant Exponent. Exponent’s root-cause analysis of the Oct. 2 crash was heavily redacted when released. Much of the Quinn Emanuel report focused on “who knew what, and who said what to whom [and] when,” Koopman noted in his analysis of the accident report.

He credits Cruise with establishing a War Room in response to the crash and communicating with San Francisco emergency officials and state regulators. However, it has since emerged that the company was less than forthcoming in detailing what happened.

Reuters reported that a Cruise official did indeed notify California regulators a day after the crash, but omitted mention of the Cruise AV pullover maneuver that dragged and severely injured the pedestrian.

Koopman does fault Cruise for quickly narrowing the focus of its crash investigation and adopting an “overly defensive” posture rather than seeking answers, and therefore lessons, that could be used to boost AV safety.

Nor did the company suspend fleet operations during its investigation, maintaining that the Oct. 2 crash was a one-off, or to use the preferred technical euphemism, an “edge case.”

Then there’s the question of why Cruise eroded its safety margins by removing a “safety supervisor,” that is, a backup driver in the vehicle. Koopman concludes the removal “seems motivated by business and publicity interests….” The result was bad publicity, perhaps even a turning point in the fraught efforts to operate driverless vehicles on U.S. city streets.

Other safety experts have weighed in on the Oct. 2 crash investigations, noting the apparent failure of remote operators to communicate accident details in a timely fashion. Missy Cummings of George Mason University’s Autonomy and Robotics Center emphasizes that Cruise confirmed to regulators that remote operators were quickly able to detect the trapped pedestrian under its AV via vehicle sensors.

However, the remote assistants waited weeks to pass along those vital accident details. “It defies logic that the CRAC would not be in immediate contact with [incident responders], given that the mission of the CRAC is to be the first line of defense in an accident,” Cummings writes.

Concludes Cummings: “This single issue is a major red flag that indicates Cruise’s remote operations are unsatisfactory and need close scrutiny by regulators.” Cummings shared her commentary on the Quinn Emanuel Report in her paper.

Accident scenarios

Despite ostensible safety measures like the disastrously executed pullover maneuver, Cruise developers say that they simply could not anticipate the scenario that occurred last fall.

It recalls the most harrowing accident of the space race: the oxygen tank explosion aboard the Apollo 13 service module in April 1970.

During intensive astronaut training, NASA flight controllers would throw every conceivable scenario at crews to gauge their reaction. But the flight controllers never envisioned a scenario in which an exploding oxygen tank 200,000 miles from Earth would result in a quadruple failure that nearly doomed the crew. In this case, the safety nets were the lunar module, which served as a lifeboat, and a team of dedicated flight controllers who “worked the problem.” Step by agonizing step, they guided the crew home.

As the accident in San Francisco highlights, Cruise clearly lacks comparable safety margins. If the GM unit decides to resume robotaxi operations on the streets of a major American city, state regulators must insist at the very least that Cruise reinstate a safety or valet driver in its AVs.

Bottom line:

The Cruise remote assistance center was intended as a first line of defense in an accident involving one of its AVs. It failed. Unless and until those remote operations are fixed, and other remedial steps such as reinstating safety drivers in Cruise AVs are instituted, autonomous vehicles remain unsafe in urban settings.

George Leopold, a frequent contributor to the Ojo-Yoshida Report and host of the Dig Deeper Sustainability video podcast series, is the author of Calculated Risk: The Supersonic Life and Times of Gus Grissom. He and colleague Matthew Beddingfield are at work on a book about the Apollo 1 fire.

Copyright permission/reprint service of a full Ojo-Yoshida Report story is available for promotional use on your website, marketing materials and social media promotions. Please send us an email at talktous@ojoyoshidareport.com for details.